Case Studies

Our ambitious research programs are helping to create robots that see and understand for the sustainable wellbeing of people and the environment in which they live.

A team of Centre researchers from all four partner universities have developed The Best of ACRV project, a repository that contains a selection of high-quality code produced by various Centre researchers over the last seven years.

The vision behind this project is to provide a curated collection of some of the best work to come out of ACRV, and to offer a central place where practitioners and researchers around the world can access ready-to-use, high-quality code for their robotic vision projects.

Centre Chief Investigator and Associate Professor, Niko Sünderhauf, said the repository is a great resource where data and open source software can be easily accessed and installed.

“Since 2014 hundreds of papers have been produced at conferences and journals by Centre researchers and these have been highly impactful and impressive.

“With ACRV ending we wanted to ensure this information was shared and left in a form that researchers around the world can use and build upon.

“If you are working as an academic researcher or in industry and want to translate this research into an application, you can visit the repository and code is available that is easy-to-use.

“A great deal of work has gone into this project and we spent a lot of time updating the Centre’s older projects to ensure each had a PyTorch application that is usable. Training code is also available where possible to make it simple,” Associate Professor Sünderhauf said.

The Best of ACRV team included Steve Martin, Garima Samvedi, Yan Zuo, Ben Talbot, Robert Lee, Ben Meyer and Lachlan Nicholson.

The project will be available on the ACRV Legacy website – www.roboticvision.org/legacy – in 2021.

Photo credit: loops7, E+, Getty Images

Centre researchers have been using a robotic dog called Miro to test visual navigation research – specifically a technique called teach and repeat.

Created by the University of Sheffield, Miro can often be seen driving around the Centre’s lab, collecting images to help it learn and remember a particular route. This allows it to repeat the same journey without getting lost, using only a small amount of computing power.

The idea is related to biologically inspired concepts of navigation in animals. For example, bees, with their small and limited visual systems, are able to remember the route they took from their hive to food and then return home.

Researchers are using these types of models to give robots similar navigation abilities. Autonomous route following is a very useful capability for robots, with applications such as drones repeatedly surveying an environment. It could even be used by future robots exploring Mars, where no GPS signal is available.

This research aims to develop a robust navigation capability that works not only for Miro, but for other robots too.
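As a rough illustration of teach and repeat, the sketch below stores a brightness profile for each taught frame and, on the repeat run, finds the horizontal shift that best aligns the current view with the taught one. Everything here is an assumption made for illustration: the names `best_shift` and `repeat_step`, the use of 1-D brightness profiles in place of camera images, and the sign convention (negative steering turns right). It is not the Centre’s implementation.

```python
def best_shift(taught, current, max_shift=3):
    """Return the horizontal shift (in pixels) that best aligns current with taught."""
    def cost(shift):
        # Compare only the columns that overlap after shifting.
        pairs = [(taught[i], current[i + shift])
                 for i in range(len(taught))
                 if 0 <= i + shift < len(current)]
        return sum(abs(a - b) for a, b in pairs) / len(pairs)
    return min(range(-max_shift, max_shift + 1), key=cost)


def repeat_step(route, position, current_profile, gain=0.1):
    """One repeat-phase step: compare with the taught frame and steer to correct."""
    shift = best_shift(route[position], current_profile)
    steering = -gain * shift  # scene shifted right -> turn right (assumed convention)
    next_position = min(position + 1, len(route) - 1)
    return steering, next_position


# Teach phase: the route is simply the list of profiles seen along the way.
taught_frame = [0, 0, 10, 0, 0, 0, 0]
route = [taught_frame, taught_frame]

# Repeat phase: the same landmark now appears one pixel further right.
current_frame = [0, 0, 0, 10, 0, 0, 0]
steering, next_position = repeat_step(route, 0, current_frame)
```

Because only small profiles are stored and compared, a scheme of this kind needs very little computing power, which is the property the article highlights.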

Photo credit: Dorian Tsai, ACRV Research Assistant

Centre researchers are using a mini autonomous car, Carlie, in a new research project to help growers improve yields.

Carlie is a mobile robotic platform: a miniature version of a full-size autonomous car, with range and camera sensors and significant on-board compute capability. Researchers at the Centre’s University of Adelaide node are collaborating with the Australian Institute for Machine Learning (AIML) to put their mini autonomous vehicle to the test.

As part of this, Centre researcher Mohammad Mahdi Kazemi Moghaddam is leading an “off-road” navigation project, taking the mobile platform to a local vineyard.

These novel projects are being conducted as part of a wider group project under the supervision of Associate Investigator Qinfeng “Javen” Shi.

“In the off-road project, we aim to help Australian vineyards use AI and robotics to improve their final yield,” says Moghaddam.

“We’ve developed a GPS-based navigation method using the mini car to autonomously navigate through rows in a vineyard.”

“While navigating, the car captures footage of the vines and grapes. Using computer vision techniques, it can then perform grape counts, and measure canopy size and other key information to feed to our yield estimation models.”

The research includes “auto-park” training and “follow me” scenarios in the Lab. In the auto-park project, the final goal is to enable the mini car platform to park autonomously in various scenarios using just camera inputs.

This differs from currently available systems in that no other sensors, such as proximity, LiDAR or even depth sensors, are used. This avoids a lot of design and engineering work and also reduces the computational load.

“In order to do this, we used the idea of Imitation Learning (IL),” says Moghaddam.

“In IL we try to learn a policy that maps the input (RGB image) directly to a distribution over possible control actions. The actions here are the steering wheel angle and speed.”

The training is based on samples from a human expert demonstrating the desired actions under different scenarios.
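One common way to train such a policy is behaviour cloning, where the policy is scored by how likely it makes the expert’s demonstrated actions. The sketch below assumes a Gaussian distribution over each action (steering angle and speed) and uses a short feature list as a stand-in for the RGB image; the function names and the toy policies are hypothetical and are not taken from the project.

```python
import math


def gaussian_nll(mean, std, expert_action):
    """Negative log-likelihood of one expert action under a 1-D Gaussian."""
    var = std ** 2
    return 0.5 * math.log(2 * math.pi * var) + (expert_action - mean) ** 2 / (2 * var)


def behaviour_cloning_loss(policy, demonstrations):
    """Average NLL of the expert's (steering, speed) pairs under the policy."""
    total = 0.0
    for image_features, (steer, speed) in demonstrations:
        (steer_mean, steer_std), (speed_mean, speed_std) = policy(image_features)
        total += gaussian_nll(steer_mean, steer_std, steer)
        total += gaussian_nll(speed_mean, speed_std, speed)
    return total / len(demonstrations)


# Toy demonstrations: (image features, (steering angle, speed)).
demonstrations = [([0.2], (0.1, 1.0)), ([0.8], (-0.3, 0.5))]


def matching_policy(features):
    # Hypothetical policy whose predicted means match the expert's actions.
    if features[0] < 0.5:
        return (0.1, 0.2), (1.0, 0.2)
    return (-0.3, 0.2), (0.5, 0.2)


def constant_policy(features):
    # Hypothetical policy that ignores its input entirely.
    return (0.0, 0.2), (0.0, 0.2)


good_loss = behaviour_cloning_loss(matching_policy, demonstrations)
poor_loss = behaviour_cloning_loss(constant_policy, demonstrations)
```

Training would adjust the policy’s parameters to lower this loss, so a policy that reproduces the expert’s actions scores better than one that does not.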

As the first step, the car learns to park in the nearest available spot keeping in the lane without hitting other cars. “This could be the future of valet-parking,” says Moghaddam.

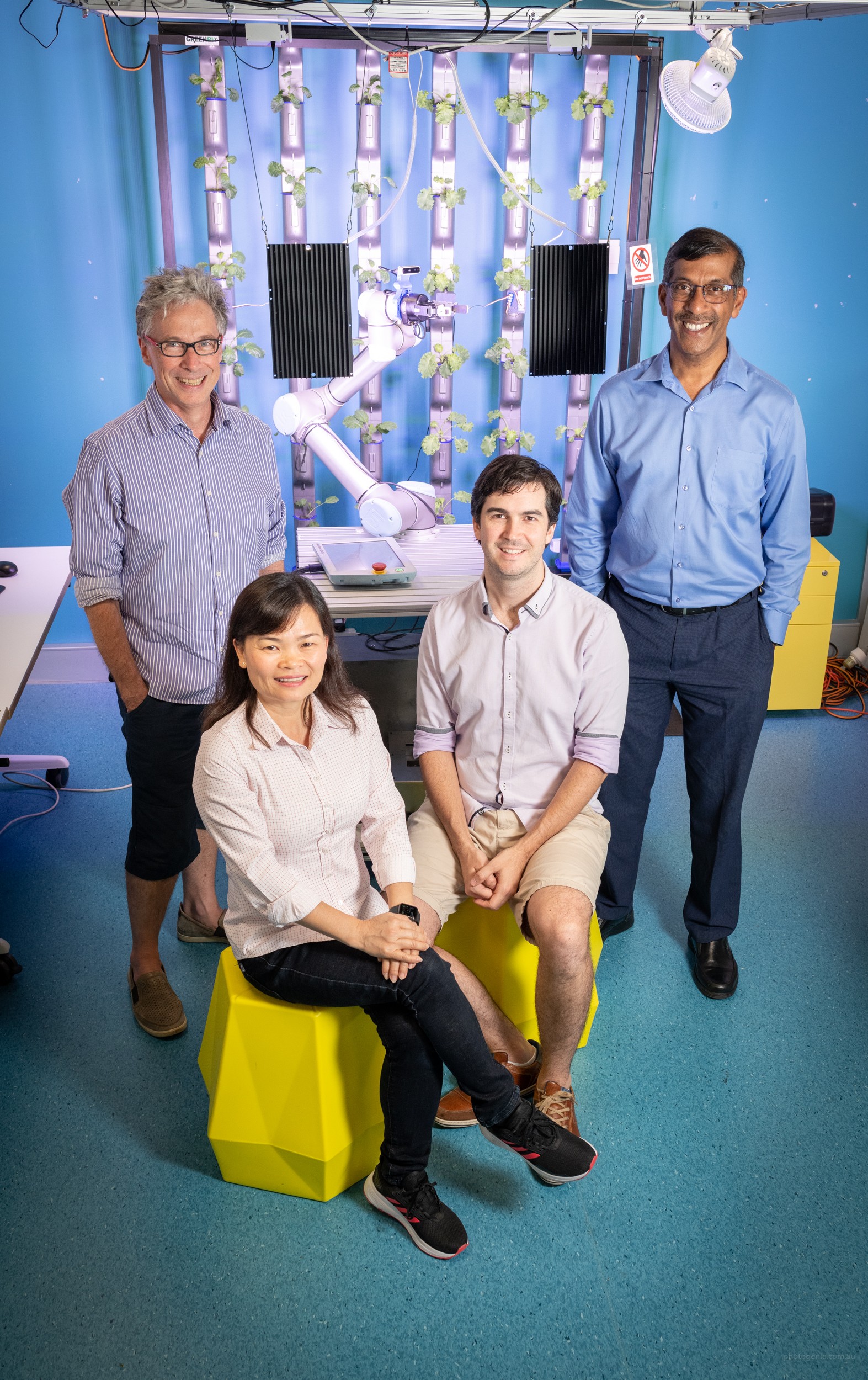

Centre researchers, led by Associate Investigator and robotics expert Dr Chris Lehnert, are working with Greenbio Group to develop a novel modular vertical growing solution. The first phase of the multi-stage ‘Modular vertical growing system’ project is aimed at helping Greenbio design and validate configuration, automation and resource-efficiency solutions to practical challenges that the company has identified.

The project involves specific engineering solutions for growing systems that maximise production efficiency with regard to labour, water and energy usage, the critical challenges that currently limit the wider adoption of intensive cropping in urbanised environments.

With these new systems and technologies, the company aims to ‘bring food production into cities, on a small or large scale’. Its automated vertical growing units will be available for purchase by industry clients worldwide.

Dr Lehnert views automated indoor cropping as a sustainable adjunct to, rather than a replacement for, conventional farming. That said, he believes robotics and automation will make valuable contributions to Australia’s future agrifood sector.

“The future potential of robotics in indoor protected cropping will be their ability to intelligently sense, think and act in order to reduce production costs and maximise output value in terms of crop yield and quality,” Dr Lehnert says.

“Robotics taking action, such as autonomous harvesting within indoor protected cropping, will be a game-changer for growers who are struggling to reduce their production costs.”

Photo credit: Xavier Montaner, Corporate Photography Brisbane

QUT researchers are working on building the technology needed to help preserve, model, and monitor the harsh Antarctic environment and its wildlife.

Top experts from a range of fields, including robotics, aim to provide practical solutions to the complex environmental challenges faced in the Antarctic. Their skills are being used as part of the Australian Research Council’s new Special Research Initiative for Excellence in Antarctic Science.

Researchers include QUT and Centre Chief Investigators Distinguished Professor Peter Corke and Professor Matthew Dunbabin. Centre Associate Investigator Professor Felipe Gonzalez will also contribute to the project through his expertise in aeronautical engineering, artificial intelligence and optimisation.

Distinguished Professor Peter Corke said robotics will be used to create systems that fly, swim and drive in the hostile Antarctic environment.

“These robots will help researchers collect valuable data that will inform scientific models on how the continent is responding to climate change.

“This is a fantastic opportunity to use robotics and computer vision to help positively impact the world in which we live,” Professor Corke said.

The research will take place over the next seven years and will include collaborations with Monash University, University of Wollongong, University of New South Wales, James Cook University, University of Adelaide, the South Australian Museum, and the Western Australian Museum.

Photo credit: robertharding, Digital Vision, Getty Images

Can you imagine assembling IKEA furniture more than 170 times in one day? That’s just what a team of Centre researchers did in order to develop a perception system that assists humans building IKEA furniture with the help of a robot!

The “Humans, Robots and Actions” project involved 10 months of data collection and included hundreds of videos to enable action and activity recognition between humans and robots.

Team Leader and ANU Research Fellow Yizhak Ben-Shabat said the goal of the project was to develop methods that facilitate robot-human interaction and cooperation.

“In order to get the robot to help a person build IKEA furniture we had to look at the several perceptual challenges of human pose estimation, object tracking and segmentation to ultimately get a robot and human to cooperate.

“Building a piece of furniture can appear to be a rather simple task but in order to work with a robot we need a reliable perception system that can pick the correct objects up in the right order without failing.”

The project looked at three main technical thrusts: estimating, tracking and forecasting human pose; understanding human-object interaction; and inferring actions and activities in video.

“At one point the entire team and their families were gathered in a single space and we captured 170 assemblies in one day!

“Finally, at the end of 2020 our goal was achieved! A robot and human interacted perfectly to build a table, and the dataset and paper were published and presented at the Winter Conference on Applications of Computer Vision (WACV) 2021.”

The team included Yizhak Ben-Shabat, Dr Xin Yu, Dr Fatemeh Saleh, Dr Dylan Campbell, Dr Cristian Rodriguez, Professor Hongdong Li and Professor Stephen Gould.

The Centre ran two challenges in 2020: the Probabilistic Object Detection (PrOD) Challenge and the Scene Understanding Challenge. They were organised by researchers Niko Sünderhauf, Feras Dayoub, David Hall, Haoyang Zhang, Ben Talbot, Suman Bista, Rohan Smith and John Skinner.

Probabilistic Object Detection Challenge

If a robot moves with overconfidence in its environment … it is going to break things.

Object detection systems needed to move beyond a simple bounding box and class label score. The Probabilistic Object Detection (PrOD) Challenge required participants to detect objects in video data produced from high-fidelity simulations. The novelty of the challenge was that participants were rewarded for providing accurate estimates of both spatial and semantic uncertainty for every detection, using new probabilistic bounding boxes.
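To make the idea of spatial and semantic uncertainty concrete, the sketch below models a detection whose box corners are uncertain and whose class label is a probability distribution. It is a simplified illustration, not the challenge’s submission format or the PDQ metric: it assumes each corner coordinate is an independent Gaussian with one shared standard deviation, and the class name `ProbabilisticBox` and its fields are hypothetical.

```python
import math
from dataclasses import dataclass


def normal_cdf(x, mean, std):
    """P(X <= x) for a Gaussian X with the given mean and standard deviation."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


@dataclass
class ProbabilisticBox:
    top_left: tuple       # mean (x, y) of the top-left corner
    bottom_right: tuple   # mean (x, y) of the bottom-right corner
    corner_std: float     # spatial uncertainty, shared by all coordinates (assumption)
    label_probs: dict     # semantic uncertainty: class name -> probability

    def pixel_inside_probability(self, x, y):
        """Probability that pixel (x, y) lies inside the uncertain box."""
        x1, y1 = self.top_left
        x2, y2 = self.bottom_right
        s = self.corner_std
        return (normal_cdf(x, x1, s) * normal_cdf(y, y1, s)
                * (1.0 - normal_cdf(x, x2, s)) * (1.0 - normal_cdf(y, y2, s)))


detection = ProbabilisticBox(
    top_left=(10.0, 10.0),
    bottom_right=(50.0, 50.0),
    corner_std=2.0,
    label_probs={"chair": 0.7, "table": 0.3},
)

p_centre = detection.pixel_inside_probability(30.0, 30.0)   # well inside the box
p_edge = detection.pixel_inside_probability(10.0, 30.0)     # on the expected left edge
p_outside = detection.pixel_inside_probability(0.0, 30.0)   # well outside the box
```

A pixel on the expected edge is inside the box with probability of about one half, while pixels deep inside or far outside are near one or zero. A detector that reports a larger `corner_std` is expressing less confidence in where the object ends.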

This challenge was associated with the ECCV 2020 Workshop, “Beyond mAP: Reassessing the Evaluation of Object Detectors”.

There were 15 submissions, with first place being awarded to PhD student Dimity Miller from QUT. Ms Miller’s submission was titled “Probabilistic Object Detection with an Ensemble of Experts”. Her results on the challenge’s dataset received the highest score on the evaluation metric Probability-based Detection Quality (PDQ).

Scene Understanding Challenge

The Centre’s Scene Understanding Challenge tasked competitors with creating systems that can understand the semantic and geometric aspects of an environment through two distinct tasks: Object-based Semantic SLAM, and Scene Change Detection.

The challenge provided high-fidelity simulated environments for testing at three difficulty levels, a simple AI Gym-style API enabling sim-to-real transfer, and a new measure for evaluating semantic object maps. All of this was enabled by the newly created BenchBot framework, which was also developed by the Centre.

The BenchBot software stack is a collection of software packages that allow competitors to control robots in real or simulated environments with a simple Python API. It leverages the simple “observe, act, repeat” approach to robot problems prevalent in the reinforcement learning community.
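The “observe, act, repeat” pattern can be sketched with a toy environment. The code below does not use the BenchBot API: `CorridorEnvironment`, `pick_action` and `run_episode` are hypothetical names invented here to show the shape of the loop, in which the agent receives an observation, chooses an action, and repeats until the task is done.

```python
class CorridorEnvironment:
    """Hypothetical 1-D corridor in which the robot must reach the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def _observe(self):
        return {"position": self.position, "goal": self.length - 1}

    def reset(self):
        self.position = 0
        return self._observe()

    def step(self, action):
        if action == "forward":
            self.position = min(self.position + 1, self.length - 1)
        done = self.position == self.length - 1
        return self._observe(), done


def pick_action(observation):
    """Trivial agent: drive forward until the goal cell is reached."""
    return "forward" if observation["position"] < observation["goal"] else "stop"


def run_episode(env, max_steps=20):
    observation = env.reset()                    # observe
    steps, done = 0, False
    while not done and steps < max_steps:
        action = pick_action(observation)        # act
        observation, done = env.step(action)     # observe again, then repeat
        steps += 1
    return steps, observation


steps_taken, final_observation = run_episode(CorridorEnvironment(length=5))
```

Because the agent only talks to the environment through `reset` and `step`, the same agent code could in principle be pointed at a simulated or a real robot, which is the appeal of this style of interface.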

BenchBot has been created as a tool to enable repeatable robotics research and has begun that work by powering the Scene Understanding Challenge.

COVID-19 posed a problem for broad participation in this challenge, so it will be repeated in 2021 as part of the Embodied AI Workshop at the 2021 Conference on Computer Vision and Pattern Recognition.

Attracting women and girls to STEM and providing an environment for them to thrive and progress is essential to ensuring fields such as engineering continue to be at the forefront of industry and the economy.

Monash’s Dean of Engineering and Centre Chief Investigator, Professor Elizabeth Croft, has made it her mission to ensure that women get the recognition they deserve and that a 50:50 gender balance is achieved in the university’s engineering enrolments by 2025.

According to the latest figures from Engineers Australia, just 13.6 per cent of the engineering labour force are women, and half of the women who qualify as engineers never work in the profession.

“We don’t need to fix girls and their perceived lack of interest in engineering careers,” said Professor Croft. “It’s our fault as a profession, not theirs. We simply haven’t made engineering a compelling choice for girls to make.”

“As an economic unit, as a country, we are missing out in the value of half of our population if we don’t include girls in this really important part of our economy.

“Year 12 girls’ participation in advanced maths and physics – the fundamental tools needed for all engineering practice – drops to half the rates of boys. The figures simply won’t change unless we take fearless action on behalf of our girls,” Professor Croft said.

Professor Croft is recognised internationally as an expert in the field of human-robot interaction. She also has an exceptional record of advancing women’s representation and participation in engineering. Most recently, as the Natural Sciences and Engineering Research Council Chair for Women in Science and Engineering, she worked with partners in funding agencies, industry and academia on comprehensive strategies to improve women’s participation and retention in the STEM disciplines at all levels.

At Robovis 2020 Professor Croft was given the Award for Contribution to Public Debate for her outstanding leadership on the topic of gender representation in STEM and in tackling the barriers to female participation in engineering. Impressively, she achieved a media reach of over 10 million people in 2020 alone!

“I don’t think we can take our foot off the pedal. I think there’s real danger in doing that.”

“We have to get to the point where it is, ‘Of course, women are going into engineering. Of course, women are going to be computer scientists. That’s normal. Women do that.’”

Photo credit: Zaharoula Harris, Zed Photography

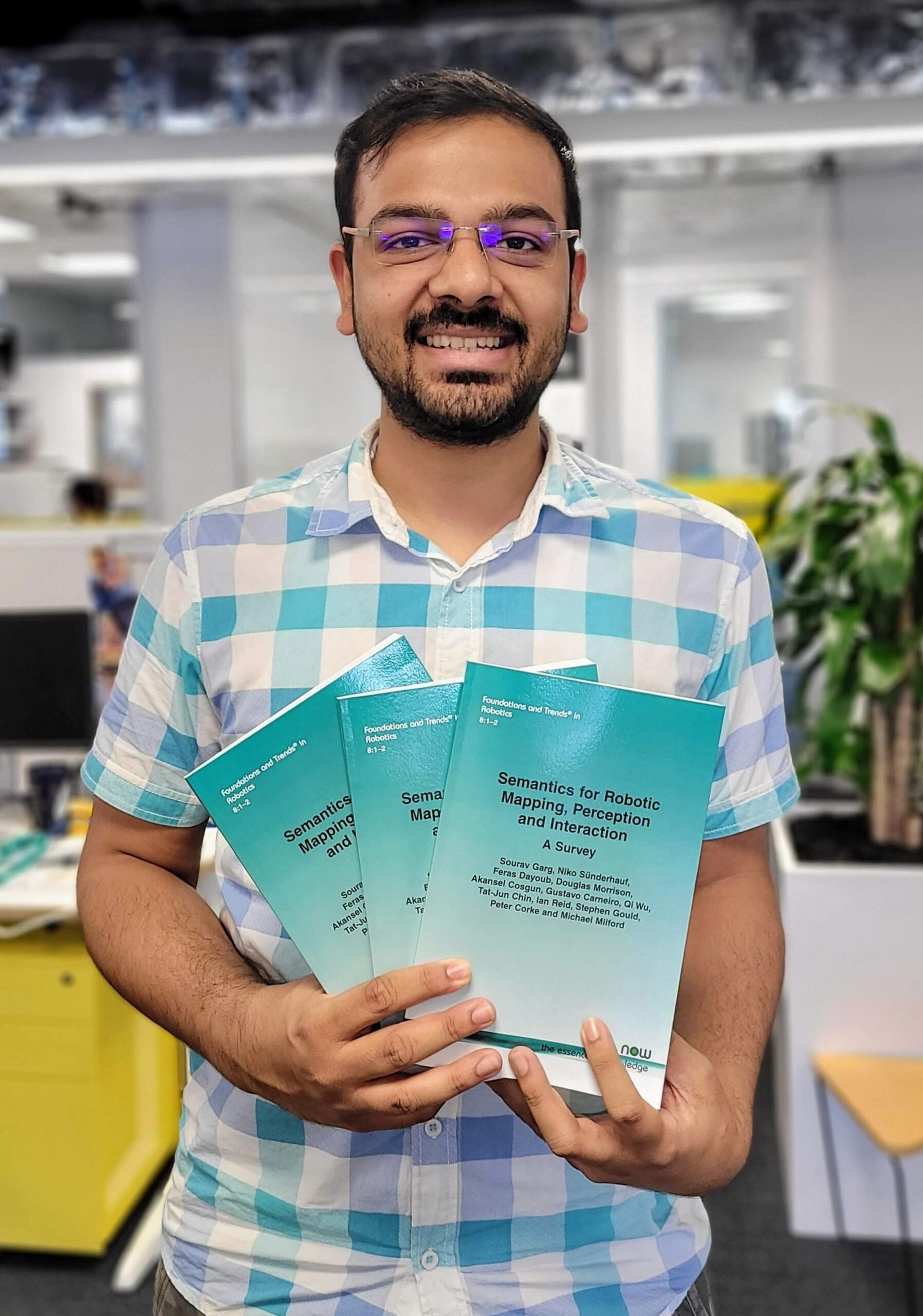

Twelve Centre researchers have spent the last 18 months reviewing over 900 papers to create the survey paper, “Semantics for Robotic Mapping, Perception and Interaction: A Survey.”

The mammoth effort, led by QUT Research Fellow Dr Sourav Garg, entailed a cross-node collaboration involving all four of the Centre’s partner universities.

Researchers reviewed an extensive number of research papers on semantics and robotics from all around the world. Dr Garg said he enjoyed watching the survey grow with incoming streams of knowledge from a diverse set of experts and co-authors.

“Unlike computer vision, robotics research is multi-faceted, where robust perception is only one of those facets.

“In this survey we connected the dots across several aspects of robotics in the context of semantic reasoning, which helps readers to holistically understand what it would take to create intelligent robots,” Dr Garg said.

The invite-only paper will be published shortly in the journal Foundations and Trends in Robotics and will be a fantastic resource for researchers in robotics and computer vision.

In an interesting Centre project this year, researchers built a virtual zoo to test the ability of robots to navigate real-world environments by seeing if they could track down the king of the jungle by using symbols and an abstract map.

In the study “Robot Navigation in Unseen Spaces using an Abstract Map”, published in the journal IEEE Transactions on Cognitive & Developmental Systems, Dr Ben Talbot and a team of researchers including Dr Feras Dayoub, Distinguished Professor Peter Corke and Dr Gordon Wyeth considered how robots might be able to navigate using the same navigation cues that people subconsciously use in built environments.

The researchers programmed an Adept GuiaBot robot with the ability to interpret signs and directional information using the abstract map, a novel navigation tool they developed to help robots navigate in unseen spaces.

Dr Talbot said that in large-scale outdoor settings like roadways, GPS navigation could be used to help robots and machines navigate a space, but when it came to navigating built environments like university campuses and shopping complexes, robots typically still relied on a pre-determined map or a trial-and-error method of finding their way around.

“As a human, if you come to a university campus for the first time, you don’t say, ‘I can’t do it because I don’t have a map, or I’m not carrying a GPS’,” Dr Talbot said.

“You would look around for human navigation cues and use that symbolic spatial information to help guide you to find a location.”

Dr Talbot said people relied on a range of navigation cues in getting around in an unfamiliar location, such as directional signs, verbal descriptions, sketch maps, and asking people to point them in the right direction.

He said the key for people navigating an unknown space was that their imagination of the space can guide them, without requiring them to have already experienced it.

The first time a person goes to a zoo, for instance, they are likely to turn up with the expectation that animals are located in logical groupings such as shared habitats. If they find koalas, they might expect to find kangaroos nearby. Similarly, they wouldn’t expect to find penguins in the desert-themed area.

“It’s this concept of when you’ve never been somewhere, you see information about a place and imagine some kind of map or spatial representation of what you think the place may look like,” Dr Talbot said.

“Then when you get there and see extra information, you adjust your map to improve its accuracy or navigational utility.

“But robots typically can’t navigate with this concept of a fuzzy map that doesn’t have any concreteness to it. This was the big contribution introduced in the abstract map; it allowed the robot to navigate using imaginations of spaces rather than requiring prior direct perception.”

Instead of explicit distances and directions that might be used by robots in navigating a rigidly structured environment like a warehouse, the GuiaBot scanned QR-like codes as it travelled around the virtual zoo to read directional information, such as “the African safari is past the information desk”.
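The idea of imagining a place from a symbolic cue and then correcting that guess can be sketched in a few lines. This is only an illustration of the concept, not the abstract map described in the paper: the functions `imagine_past` and `refine`, the fixed guess distance and the blending weight are all assumptions made here.

```python
import math


def imagine_past(observer, reference, distance=5.0):
    """Guess where a place lies when told it is 'past' a reference landmark.

    The guess is placed beyond the reference, along the line from the
    observer to the reference, at an assumed distance.
    """
    dx = reference[0] - observer[0]
    dy = reference[1] - observer[1]
    norm = math.hypot(dx, dy)
    return (reference[0] + distance * dx / norm,
            reference[1] + distance * dy / norm)


def refine(estimate, observed, weight=0.5):
    """Move the imagined position part of the way towards new evidence."""
    return tuple(e + weight * (o - e) for e, o in zip(estimate, observed))


robot = (0.0, 0.0)
information_desk = (10.0, 0.0)

# "The African safari is past the information desk."
safari_guess = imagine_past(robot, information_desk)

# Later evidence suggests the safari is nearer (17, 2); adjust the imagined map.
safari_refined = refine(safari_guess, (17.0, 2.0))
```

The robot can set off towards `safari_guess` immediately, without ever having seen the safari, and then tighten the estimate as further signs are read along the way.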

While the robot proved slightly quicker than people who navigated the space using the same directional information, Dr Talbot said the limited sample size in the study meant the robot versus person comparison should not be overemphasised.

“It’s a meaningful result in that we can say performance was comparable to that of humans, but it’s not at a stage yet where we could drop a human and robot on a real campus and they could compete,” Dr Talbot said.

“We would like to eat away at some of those differences in the future though.”

Photo credit: QUT Media

For robots, grasping and manipulation are hard. However, a team of researchers has taken on the challenge, using a robotic workstation demonstrator to showcase the Centre’s capability in vision-enabled robotic grasping.

The table-top manipulation demonstrator was challenged with everyday tasks including picking up an object, placing an object, and handing an object to a person. The focus of the research was for robots to master manipulation in unstructured and dynamic environments that reflected the unpredictability of the real world. To achieve this, the robot has to be able to integrate what it sees with how it moves. This allows it to operate effectively in the complex and ever-changing world inhabited by humans, while also being robust enough to handle new tasks and new objects.

To showcase these research results the team also created an interactive demonstrator experience which includes the following: spoken voice call to action with different feedback based on the proximity of detected faces; a pick and place demo; compliant control demo where the robot opens and closes valves; and a hand-over demo where the robot passes an object to the user.

The demonstrator was then put through its paces at a number of different installations where it interacted with the public. These included the Advanced Robotics for Manufacturing Hub (ARMHub), The Cube QUT and the World of Drones Congress 2020.

In the final months of 2020, the team added additional capability developed by the Centre: an object recognition system based on RefineNet-lite, and an intelligent way to select objects and actions based on Visual Question and Answer technology from the Centre’s Vision & Language project.

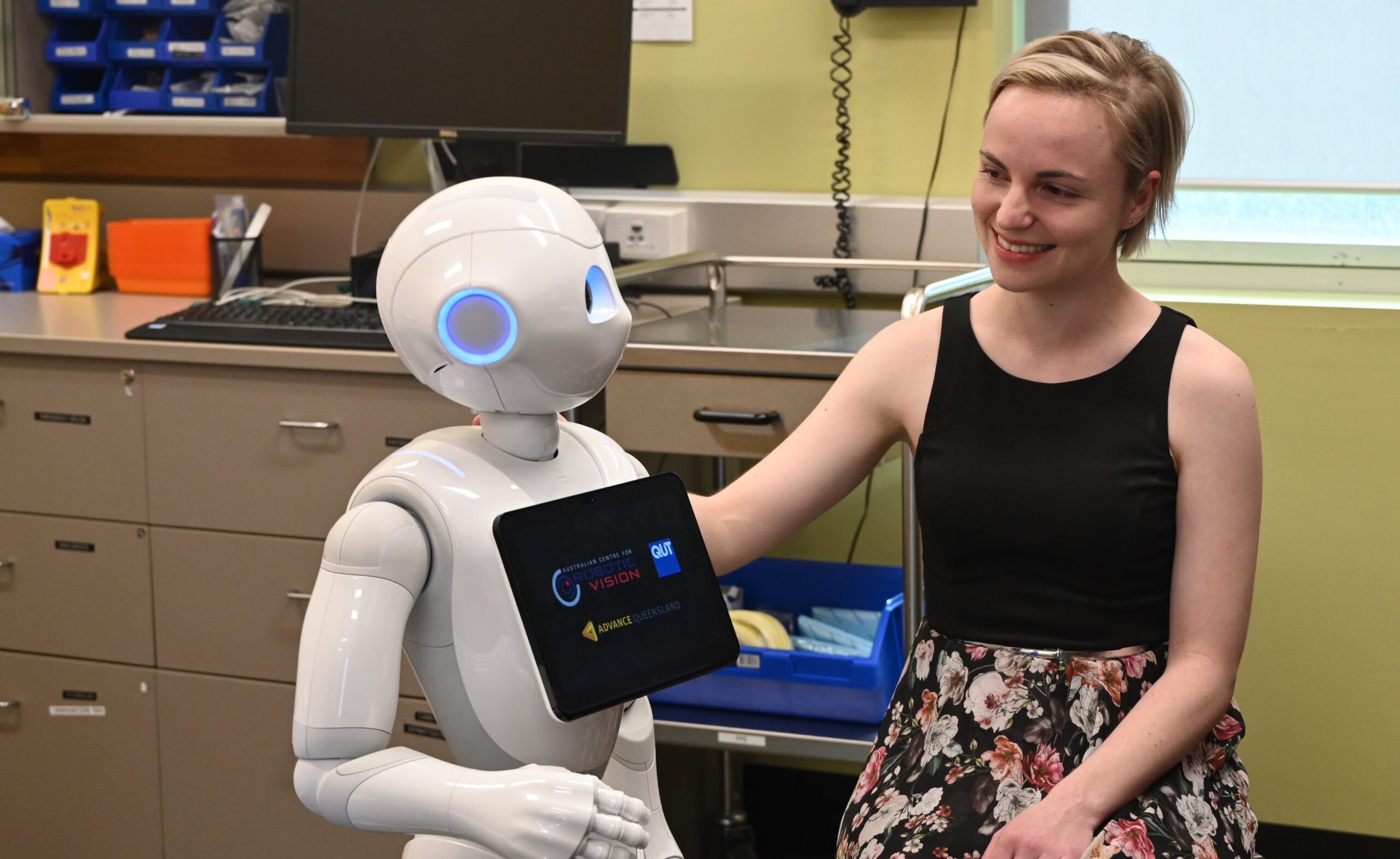

Australian Centre for Robotic Vision Research Fellow Dr Nicole Robinson has found that small interactive robots are proving useful in helping people develop and implement healthy lifestyle plans, showing they can support weight loss and healthy habits.

The research project, led by Dr Robinson, placed social robots with 26 participants to deliver a multi-session behaviour change treatment program around diet and weight reduction.

The robots then interacted with the participants to guide them through the treatment program by providing audible prompts. Results found the robot-delivered program helped people to reduce snack episodes by more than 50 per cent and to achieve 4.4 kg of weight loss. Additionally, the project found that an autonomous robot-delivered program may be just as effective as a human clinician delivering a similar intervention.

“There has been previous work in digital health and wellbeing programs using smartphones and web-based digital applications. We were interested to see how a social robot might be able to support health and wellbeing – given that it has an embodiment, it looks like a companion, it can be in the room and talk to people,” Dr Robinson said.

The social robot program follows a health-coaching method known as functional imagery training, designed to prompt self-reflection.

The robot took participants through a series of questions designed to get them thinking about how they might make a change and create a plan to see positive change in their lifestyle. It then invited participants to interact with the robot and develop a goal to improve their eating habits.

“The robot was able to work with them to help come up with a plan. People were able to discuss a lifestyle behaviour that they wanted to change, and the robot helped them to come up with steps to start making that change,” Dr Robinson said.

Dr Robinson hopes to build on the study to explore whether these sorts of social robot interventions can be applied to other health areas.

“If a robot can be programmed to provide support to treatment, they become another piece of technology that can extend the reach of the clinician to provide greater support to both patients and practitioners,” Dr Robinson said.

Feature image photographed by Mike Smith, Mike Smith Pictures